In my next projects, I will be working with Langflow, n8n and CrewAI 💡🤖

Little John is a LangChain ReAct Agent powered by Gemini LLM, designed as a Python-based voice assistant that processes natural language entirely on-device.

It uses a Push-to-Talk system for activation, Whisper for local speech recognition, and a local TTS engine for responses.

Through Microdot and GPIOZero, it can interact with the physical world, for example, turning on LEDs or executing other IoT actions.

Technologies used:

A simple web application created with Streamlit that demonstrates the functioning of RAG (Retrieval Augmented Generation). The app uses LlamaIndex to load and query content from Wikipedia pages related to Artificial Intelligence and Machine Learning, providing answers based on the retrieved context.
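As a rough illustration of the retrieve-then-answer idea behind RAG, here is a minimal sketch in plain Python. It does not use LlamaIndex or Streamlit and is not the app's actual code: the bag-of-words scoring and the names `retrieve` and `build_prompt` are assumptions made up for this example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, passages, top_k=1):
    """Return the top_k passages most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        passages,
        key=lambda p: cosine(q, Counter(tokenize(p))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, passages, top_k=1):
    """Assemble the prompt a language model would receive (hypothetical format)."""
    context = "\n".join(retrieve(question, passages, top_k))
    return (
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer using only the context."
    )


if __name__ == "__main__":
    docs = [
        "Machine learning is a field of study in artificial intelligence.",
        "Catania is a city in Sicily.",
    ]
    print(build_prompt("What is machine learning?", docs))
```

A real pipeline would replace the word-count vectors with embeddings and send the prompt to a model, but the flow (rank passages, then answer from the best ones) is the same.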

Technologies used:

A web application for the efficient management of personnel in small and medium-sized enterprises.

The system centralizes and automates key HR processes, including employee management, attendance monitoring, leave management, and payroll processing.

It includes login, registration, and authentication, and offers advanced features such as performance report generation and a notification system for corporate communications.

Technologies used:

Main Features:

Cybershop is a fictional business management app set in the year 2079. It sells cybernetic prostheses, which can be previewed in the app through augmented reality or in 3D as STL files (a CAD format). Once purchased, they can be printed at home on a 3D printer capable of printing biocompatible implants and electronic circuits.

The project and demo were created for the Mobile Programming course.

Technologies and Services Used:

Images and texts were generated with Scribble Diffusion and ChatGPT. The demo is functional and responsive.

Repository and presentation available in PDF.

Go to the pdf Go to project

The project stems from curiosity about the limits of the USB and MIDI protocols on a Novation Launchpad MK1.

Initially, by fuzzing the device with PyUSB and random packets, I managed to turn on its LEDs, even though it was not supported on Linux.

Subsequently, I delved into its internal workings: the Launchpad is a 9x9 grid of illuminable keys (3 levels of red and 3 of green, combinable into yellow) that responds to MIDI commands.

I developed three Python scripts to describe the matrix, control its LEDs, and send systematic commands, using a wrapper that leverages amidi as a subprocess.

In addition to controlling the lights, the script receives and prints the MIDI events of the pressed keys, transforming the Launchpad into an interactive interface.

In this way I was able to turn the LEDs on, off, and color them as I pleased, without official drivers, even creating visual effects with random packets and instant shutdown sequences.
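To give an idea of what such a wrapper might look like, here is a minimal sketch. It is not the code from the repository: the function names and the `hw:1,0,0` port are hypothetical, and the byte layout (Note On `0x90`, key = 16 × row + column, velocity = 16 × green + red + 12) is an assumption taken from Novation's published programmer's reference for the original Launchpad rather than from the scripts themselves.

```python
import subprocess

NOTE_ON = 0x90      # MIDI Note On, channel 1
NORMAL_FLAGS = 12   # "copy" + "clear" bits, assumed from the programmer's reference


def led_velocity(red, green):
    """Encode red/green brightness (0 = off, 3 = full) into one velocity byte."""
    if not (0 <= red <= 3 and 0 <= green <= 3):
        raise ValueError("red and green must be between 0 and 3")
    return 16 * green + red + NORMAL_FLAGS


def key_number(row, col):
    """Map a grid position to a MIDI note in the default X-Y layout (assumed)."""
    if not (0 <= row <= 7 and 0 <= col <= 8):
        raise ValueError("row must be 0-7 and col 0-8")
    return 16 * row + col


def note_on_hex(row, col, red, green):
    """Build the three-byte Note On message as a hex string for amidi."""
    return f"{NOTE_ON:02X} {key_number(row, col):02X} {led_velocity(red, green):02X}"


def amidi_command(port, hex_message):
    """Build the amidi invocation; -p selects the port, -S sends hex bytes."""
    return ["amidi", "-p", port, "-S", hex_message]


def light_key(port, row, col, red, green):
    """Send the message to the device by running amidi as a subprocess."""
    subprocess.run(amidi_command(port, note_on_hex(row, col, red, green)), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so no hardware is needed.
    print(amidi_command("hw:1,0,0", note_on_hex(0, 0, red=3, green=3)))
```

Mixing full red and full green in the same velocity byte is what produces the yellow/amber colour mentioned above.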

Go to video Go to project on GitHub Go to LinkedIn update

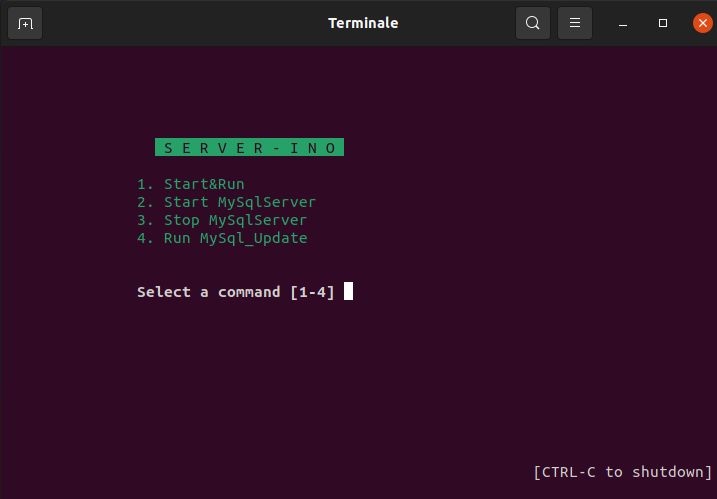

A tiny graphical interface for LAMPP, written in Bash with tput (a terminal-control utility, used here as a mini graphics library). Its purpose is to start a local server without typing commands by hand.

Go to project

Johnny The CyberCar Assistant is a proof of concept (PoC) of a fully local RAG-enabled AI agent grafted onto an Opel Corsa B from 1997, the result of my bachelor's thesis in computer engineering at the University of Catania.

The project consists of 3 systems:

Inspired by KITT from Supercar (aka Knight Rider), Johnny makes the car smart: thanks to an ESP32 connected to the CAN bus and equipped with a 4G modem, it enables a voice interface, long-term memory and intelligent control (via the OBD-II port) at the edge.

The rest of the AI system runs remotely on a server with a consumer GPU (GTX 1080 Ti).

The assistant is invoked with the wake phrase "Hey Johnny!". After that, voice commands can act locally on the car's devices (like raising the windows) or start a voice-to-voice conversation with the remote chatbot, which responds using the context of the car's data (GPS position, speed, temperature, etc.), all without ever taking your hands off the steering wheel.

The Qdrant vector database manages the assistant's short- and long-term memory, enabling a true RAG (Retrieval-Augmented Generation) experience.

All services are orchestrated with Docker Compose.

🎤 User voice

↓

❓ If the command is local → executes local action ⚙️

Otherwise:

↓

🐱 contacts Johnny, the remote chatbot

↓

🌐 Cloudflared (authentication)

↓

📝 WhisperAI (Voice2Text on GPU)

↓

🧠 LLaMA3 (Ollama, inference on GPU)

↓

🔊 Text2Speech (vocal response)

↓

❓ Loop: returns to Johnny 🐱 until it detects “stop”
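The routing in the flow above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the thesis code: `run_session`, `local_actions` and `remote_chat` are hypothetical names, the speech-to-text and text-to-speech steps are replaced by plain strings, and it assumes that once a remote conversation has started every utterance goes to the chatbot until "stop" is heard.

```python
def run_session(utterances, local_actions, remote_chat):
    """Route each transcribed utterance to a local action or the remote chatbot.

    utterances    -- iterable of already-transcribed phrases (stand-in for the mic)
    local_actions -- dict mapping a known phrase to a zero-argument callable
    remote_chat   -- callable taking the user's text and returning the reply
    """
    replies = []
    in_chat = False  # True while a remote conversation is open
    for text in utterances:
        phrase = text.strip().lower()
        if in_chat:
            if phrase == "stop":
                in_chat = False  # leave the chat and wait for new commands
            else:
                replies.append(remote_chat(text))
        elif phrase in local_actions:
            replies.append(local_actions[phrase]())  # handled at the edge
        else:
            in_chat = True  # anything unknown opens a remote conversation
            replies.append(remote_chat(text))
    return replies


if __name__ == "__main__":
    actions = {"raise the windows": lambda: "windows raised"}
    heard = ["Raise the windows", "How fast am I going?", "stop"]
    print(run_session(heard, actions, lambda t: f"chatbot reply to: {t}"))
```

Keeping the local command table separate from the chatbot call mirrors the split in the diagram: simple actions never leave the car, while everything else goes through the remote pipeline.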

N.B. The project is completely open source and was created in about two and a half months. It is designed for hardware reuse and edge computing in the automotive sector.

The architecture, images, videos and the full thesis in PDF (Italian only) are available in the repository.

Local Whisper Cat: An official Cheshire Cat AI plugin to transcribe audio locally on your GPU/CPU, in Docker.

Chatty: Voice client in Python to contact the Cheshire Cat AI server.

Chattino: Arduino/C++ voice client for ESP32 to contact the Cheshire Cat AI server.

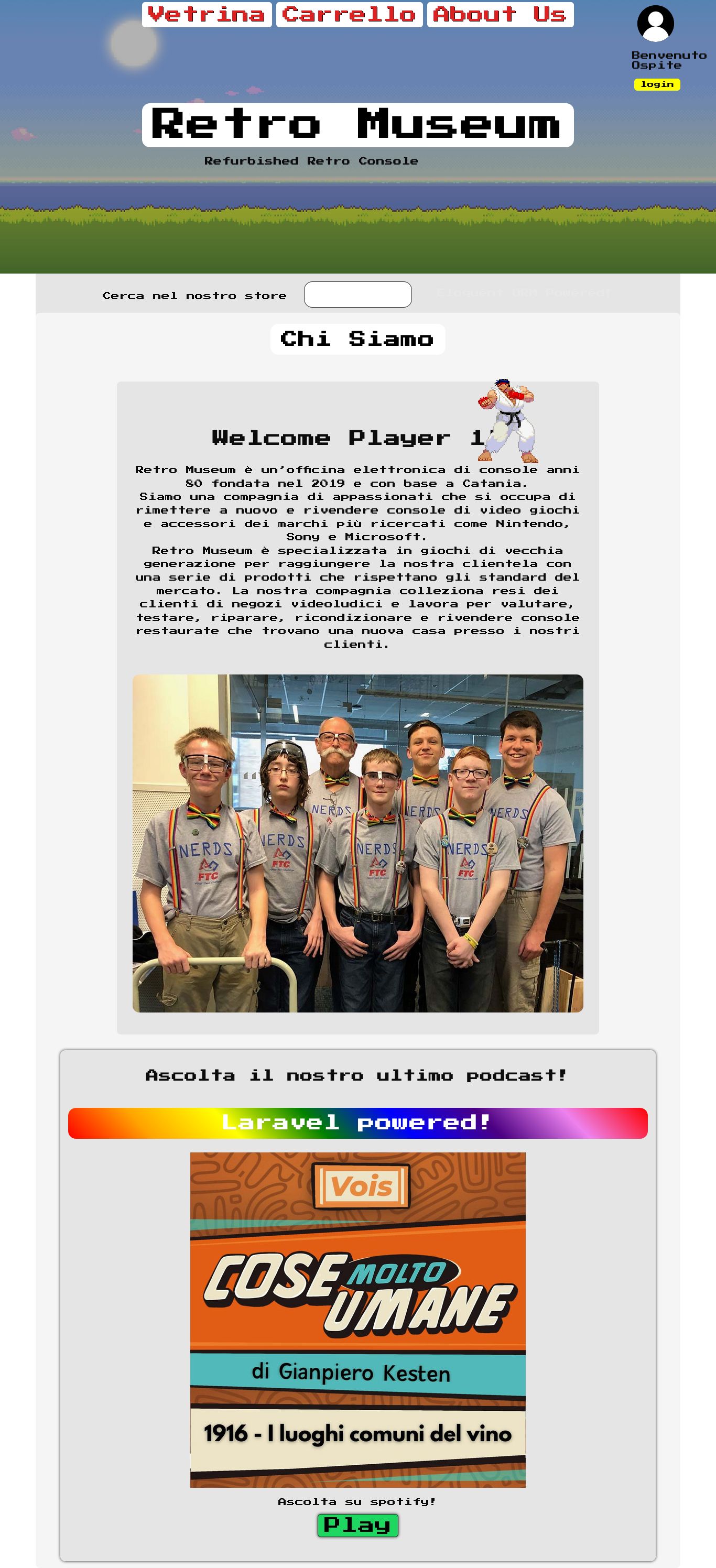

Retromuseum is a university web development project: a mock e-commerce site for a fictional company from Catania, where users can buy used games and consoles, with a visual style heavily inspired by classic 8-bit graphics.

Initially the backend was implemented in pure PHP, then migrated to Laravel.

It uses a pre-populated MySQL database, calls the Spotify API (OAuth 2.0) for the latest (fake) podcast available, and uses MongoDB as a database for the cart (only to get extra points for the project).

It includes a login system with client- and server-side validation, a search bar, and content generated dynamically via JS from the rawg.io API.

The entire web app is mobile-responsive.

Go to project

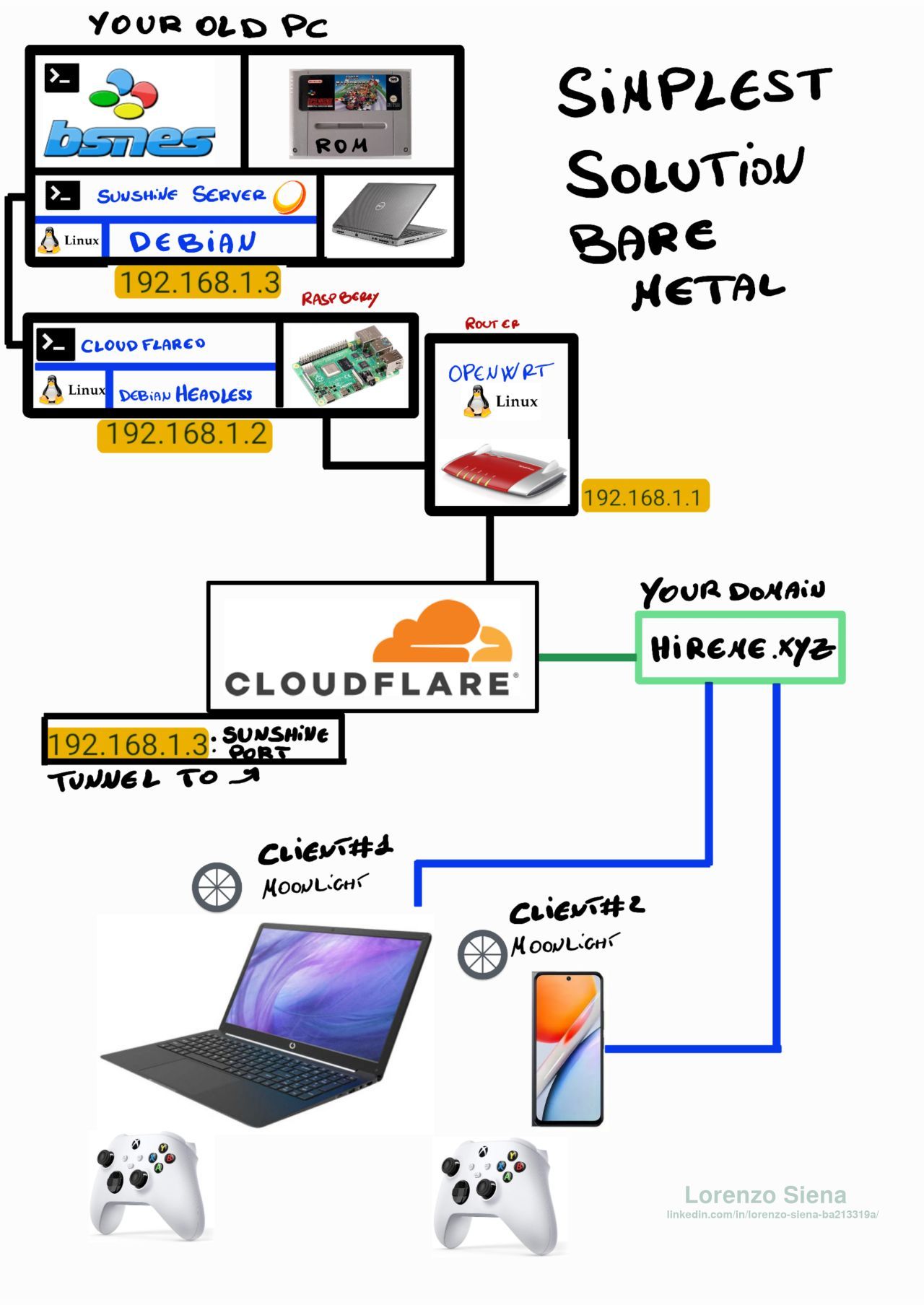

A simple local cloud gaming solution: an old laptop runs Debian, an SNES emulator, and the Sunshine server. This makes it possible to stream retro games over the network and connect remotely with the Moonlight client, without containers or virtualization.

Technologies used:

N.B. Minimal and zero-cost configuration, designed for hardware recycling.

Go to the linkedin post

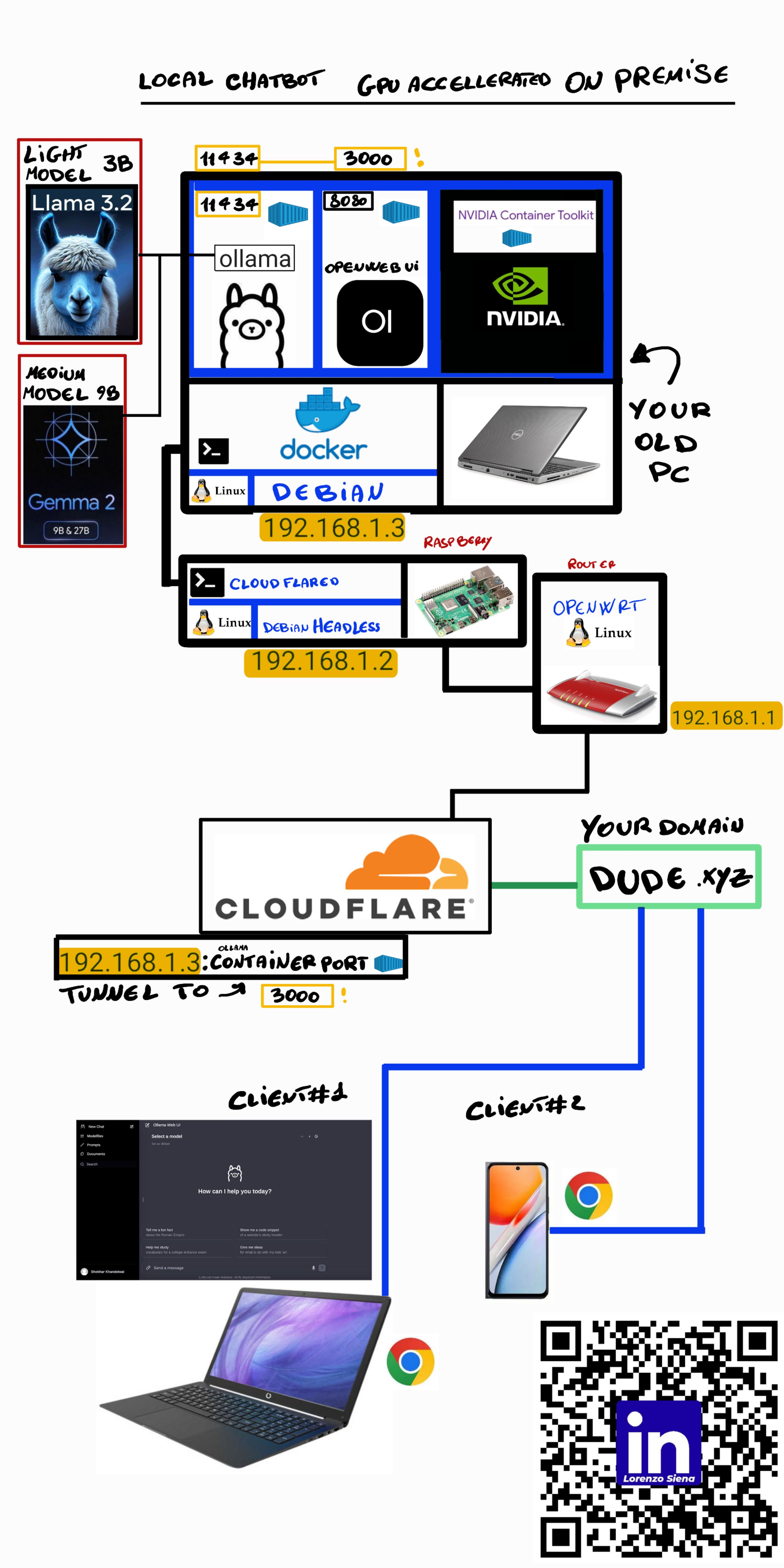

A private local chatbot project, running on an old laptop with Docker. The model (Gemma2 or LLaMA 3.2) runs in an Ollama container with a web interface via OpenWebUI, accessible on port 3000.

It's immediately available on the LAN, while a Raspberry Pi running Cloudflare Tunnel also exposes the service securely for remote access, via a custom domain (.xyz) and authentication.

Technologies used:

N.B. Configuration designed to recycle hardware and avoid e-waste.

Go to the Local Chatbot linkedin post